Introduction

Have you ever clicked a button on a website and nothing happened? Or had an app suddenly close on its own? These are bugs: mistakes in software that often look small. But while they may seem minor, their effect on a business can be massive.

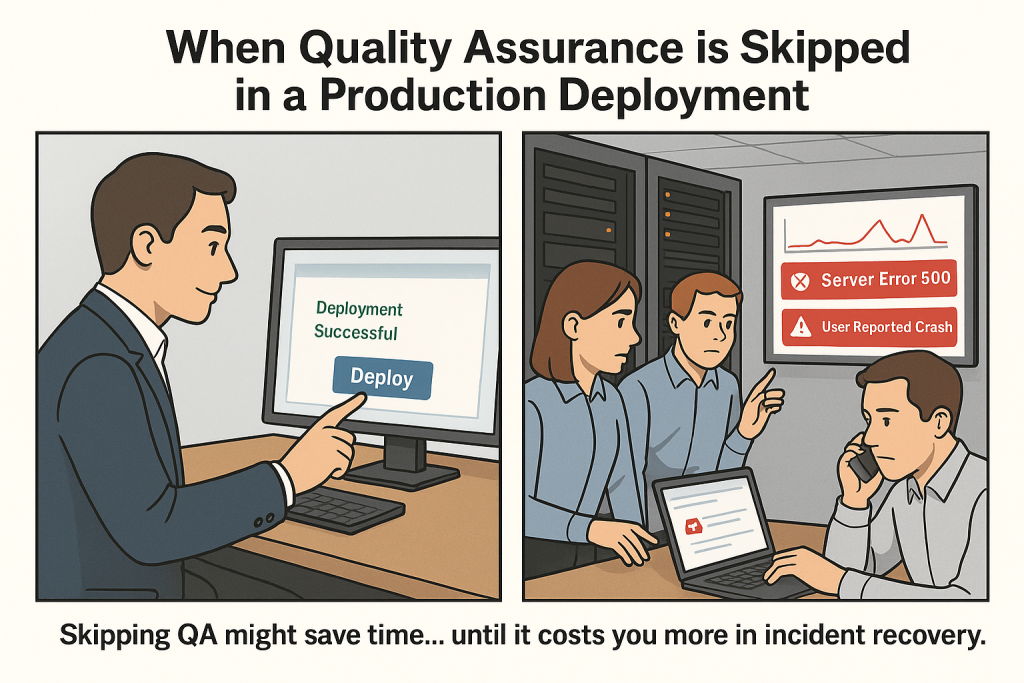

In this post, we’ll show how a single small bug can lead to lost revenue, angry customers, and even business failure.

💥 What is a Bug?

A bug is a problem in a computer program that makes it behave the wrong way. For example:

- A payment page doesn’t load

- A mobile app crashes

- A wrong price shows up in your cart

These issues may look technical, but they can cause serious business problems.
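To make the “wrong price” example concrete, here is a minimal, hypothetical sketch in Python of how a one-line mistake can undercharge customers. The function names and prices are invented for illustration. The bug is a classic off-by-one error: the loop stops one item early, so the last item in the cart is never charged.

```python
from decimal import Decimal


def cart_total_buggy(prices: list[Decimal]) -> Decimal:
    """Hypothetical cart total containing an off-by-one bug."""
    total = Decimal("0.00")
    # BUG: range(len(prices) - 1) stops one item early,
    # so the last item in the cart is never added to the total.
    for i in range(len(prices) - 1):
        total += prices[i]
    return total


def cart_total(prices: list[Decimal]) -> Decimal:
    """Correct version: add up every item in the cart."""
    return sum(prices, Decimal("0.00"))


if __name__ == "__main__":
    cart = [Decimal("10.00"), Decimal("5.50"), Decimal("3.25")]
    print("buggy total:", cart_total_buggy(cart))  # buggy total: 15.50
    print("correct total:", cart_total(cart))      # correct total: 18.75
```

Nothing crashes and no error message appears, which is exactly why a bug like this can go unnoticed while it quietly costs money on every order.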

📊 How Bugs Affect Business

Let’s take a look at this chart. Here is what each area means:

- Revenue Loss: If people can’t pay, the business loses money.

- Brand Damage: Users lose trust in your product.

- Customer Churn: Frustrated customers leave.

- Operational Cost: More time and money spent fixing bugs.

- Compliance Risk: Bugs in sensitive systems can lead to legal trouble.

🧾 Real-Life Bug Disasters

🚨 Knight Capital (USA)

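In August 2012, a bug in its trading software cost the firm about $440 million in roughly 45 minutes. It survived only through an emergency rescue by outside investors and never recovered as an independent company; it was acquired by Getco the following year.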

🛒 Amazon Sellers (UK)

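In December 2014, a glitch in third-party repricing software caused products to be listed on Amazon UK for £0.01. Because orders were processed automatically, some sellers lost their entire stock for almost nothing.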

📱 Facebook Ads

In 2020, a system bug caused some advertisers to be overcharged. Facebook had to issue refunds, and it lost advertisers’ trust for a while.

🧠 Not All Bugs Are Equal

Some bugs are small. Some are dangerous. Let’s compare:

| Bug | Looks Small? | Business Risk |
|---|---|---|
| App crashes once | Yes | Low |
| Submit button doesn’t work | Maybe | High |
| Wrong tax added | No | Very High |

✅ How to Prevent Serious Bug Impact

- Test Early: Don’t wait until launch. Start testing when development begins.

- Automate Testing: Use automated tests to check key features, such as checkout and payments, every time you release an update.

- Talk with Business Teams: Developers should understand which parts of the app matter most to the business.

- Fix Fast, Learn Faster: When a bug happens, fix it quickly and learn from it.
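As a rough illustration of automated testing, here is a minimal sketch using Python’s built-in `unittest` module. The `price_with_tax` helper and the 20% rate are hypothetical examples, not taken from any real system. The idea is that a few small checks, run automatically on every update, can catch a “wrong tax added” bug before customers ever see it.

```python
import unittest
from decimal import Decimal, ROUND_HALF_UP

CENT = Decimal("0.01")


def price_with_tax(net: Decimal, tax_rate: Decimal) -> Decimal:
    """Hypothetical helper: apply tax once and round to whole cents."""
    gross = net * (1 + tax_rate)
    return gross.quantize(CENT, rounding=ROUND_HALF_UP)


class PriceWithTaxTest(unittest.TestCase):
    """Regression tests intended to run automatically on every update."""

    def test_standard_rate(self):
        # 20% is an example rate chosen only for illustration.
        self.assertEqual(price_with_tax(Decimal("10.00"), Decimal("0.20")), Decimal("12.00"))

    def test_rounds_to_cents(self):
        # 9.99 * 1.20 = 11.988, which should round to 11.99.
        self.assertEqual(price_with_tax(Decimal("9.99"), Decimal("0.20")), Decimal("11.99"))

    def test_zero_rate_leaves_price_unchanged(self):
        # With no tax, the customer pays exactly the listed price.
        self.assertEqual(price_with_tax(Decimal("4.50"), Decimal("0")), Decimal("4.50"))
```

If this were saved as a file such as `test_pricing.py` (a hypothetical name), it could be run with `python -m unittest test_pricing`, and a build pipeline could run the same command on every change so that a broken tax calculation fails the build instead of reaching customers.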

Here is a simple bar graph showing how software bugs can affect different areas of a business. The higher the bar, the greater the impact.

🎯 Final Words

Even one small bug can damage a brand or cost a company millions. That’s why businesses must take bugs seriously, not just as a technical problem but as a business threat.

👨‍💻 Remember: A bug in software is a hole in your business.